Nowadays, applications Developers’ve designed new tables and indexes in many projects due to DB Architects/DB performance expert’s unavailability. Often due to a lack of indexes, queries that were extremely fast when database tables have only thousands of rows will become quite slow when the tables have millions of rows.

In this blog, I will explain a simple, easy and most efficient way to speed up MySQL queries by application developers or MySQL DBA engineers.

MySQL Slow Query Log File:

Generally, MySQL Can save all slow queries into a file after enabling MySQL slow query log file. It is disabled by default and easy to enable using the following MySQL commands.

SET GLOBAL slow_query_log = 'ON';

SET GLOBAL long_query_time = 0.1;These MySQL configuration settings help to save all SQL Queries which has taken more than 100 milliseconds. To clarify, The MySQL variable long_query_time can control the time interval, you can change it if you want to increase or decrease. As for my knowledge, MySQL gives the best performance after optimizing all slow queries(>100ms) which have taken more than 100ms execution time.

After one day, the slow query log file has all queries which need query optimization. By default, MySQL creates the slow query log file in the data directory folder. Using the following command you get the exact location of the slow query log file.

show VARIABLES like '%slow_query_log_file%'MySQL Slow Query Log analyzing utilities & Tools

- mysqldumpslow , Pt-query-digest [utilities]

- SmartWorkbench Slow query log File analyzer [Advance tool with Query Optimization]

There are some utilities named sqldumpslow , Pt-query-digest which can consolidate slow query log files and generate a report. It has basic information such as Identical Queries execution frequency, execution time, Lock time etc. This information helps found topmost problematic slow queries. Generally, It is useful if your project has a DB architect or DB performance expert for Optimizing queries. But, It can’t help you to optimize SQL Queries which suppose to do by DB Performance Experts/ DB architects.

$ ./pt-query-digest slowquery.log

# 4.3s user time, 200ms system time, 39.12M rss, 4.12G vsz

# Current date: Thu Nov 29 10:02:45 2018

# Hostname: JJG.local

# Files: slowquery.log

# Overall: 4.51k total, 36 unique, 1.27 QPS, 15.65x concurrency __________

# Time range: 2018-11-27 21:00:23 to 21:59:38

# Attribute total min max avg 95% stddev median

# ============ ======= ======= ======= ======= ======= ======= =======

# Exec time 55640s 5s 118s 12s 16s 4s 13s

# Lock time 18446744073714s 34us 18446744073710s 4085657602s 260us 271453769812s 194us

# Rows sent 2.95M 0 103.99k 684.27 0 8.14k 0

# Rows examine 293.63M 0 18.67M 66.59k 0.99 583.21k 0.99

# Query size 44.63M 79 1.22M 10.12k 2.16k 97.85k 2.06k

# Profile

# Rank Query ID Response time Calls R/Call V/M

# ==== ============================= ================ ===== ======= =====

# 1 0x5AE6E128A4790517E5CFFD03... 52666.0213 94.7% 4363 12.0711 0.86 UPDATE some_table

# 2 0x222A6FC43B63B119D2C46918... 618.3909 1.1% 29 21.3238 1.91 UPDATE some_table

# 3 0xD217B90797E8F34704CF55AF... 463.7665 0.8% 29 15.9919 0.07 SELECT some_other_table some_other_other_tableSmartWorkbench SlowQueryLogFileAnalyser (SQA)

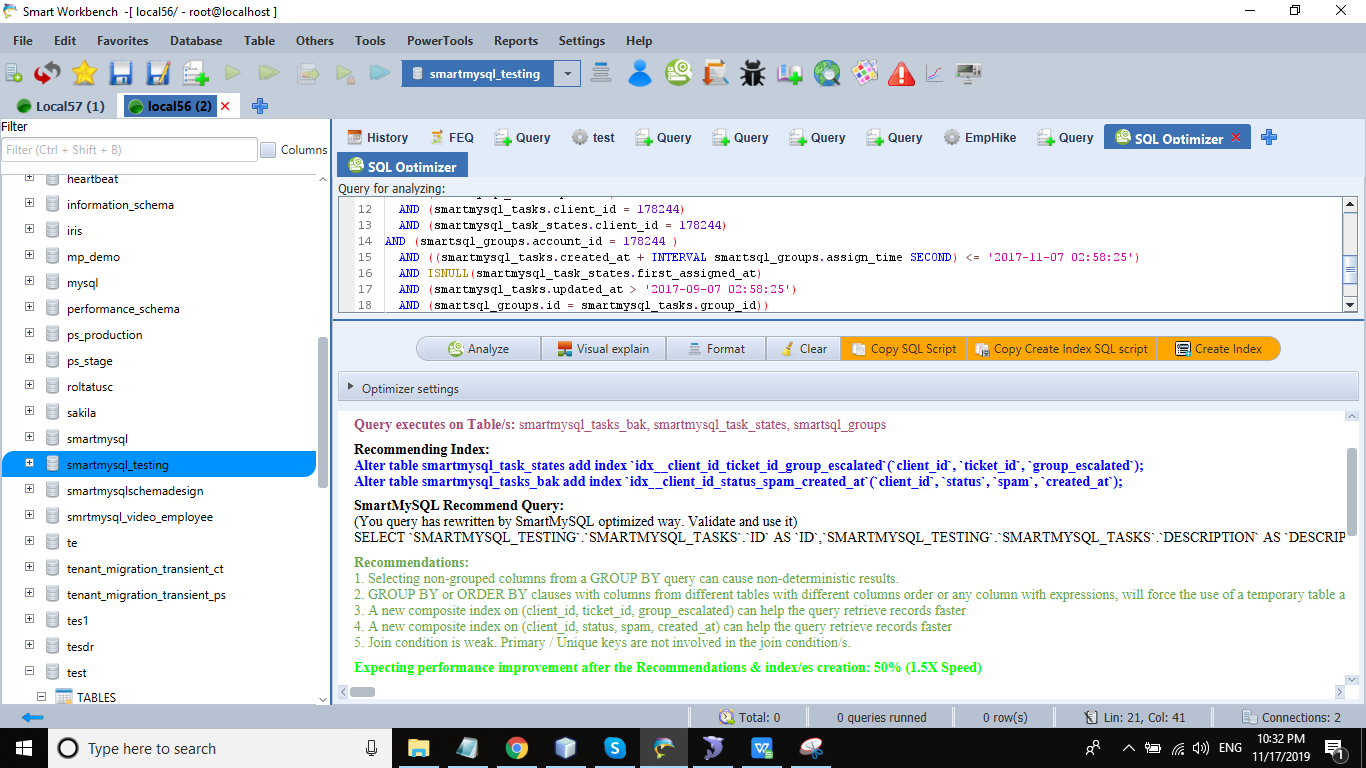

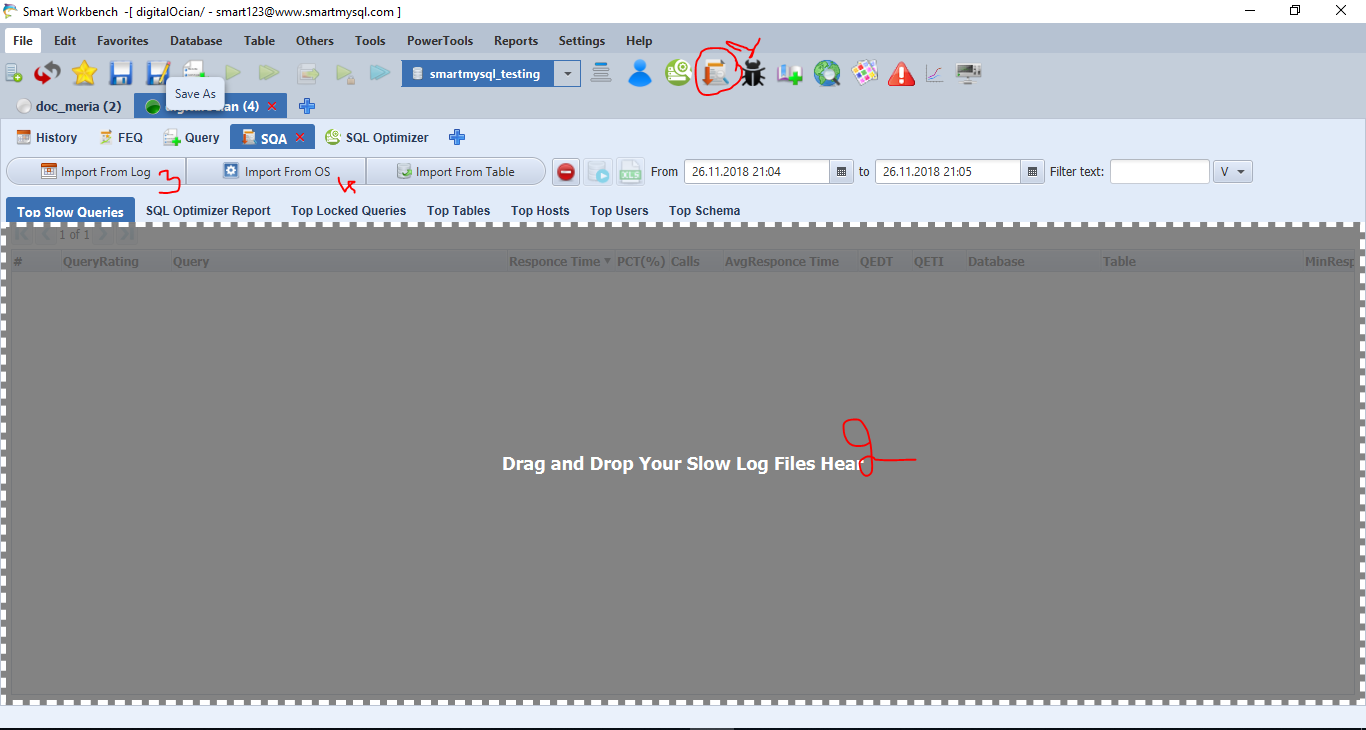

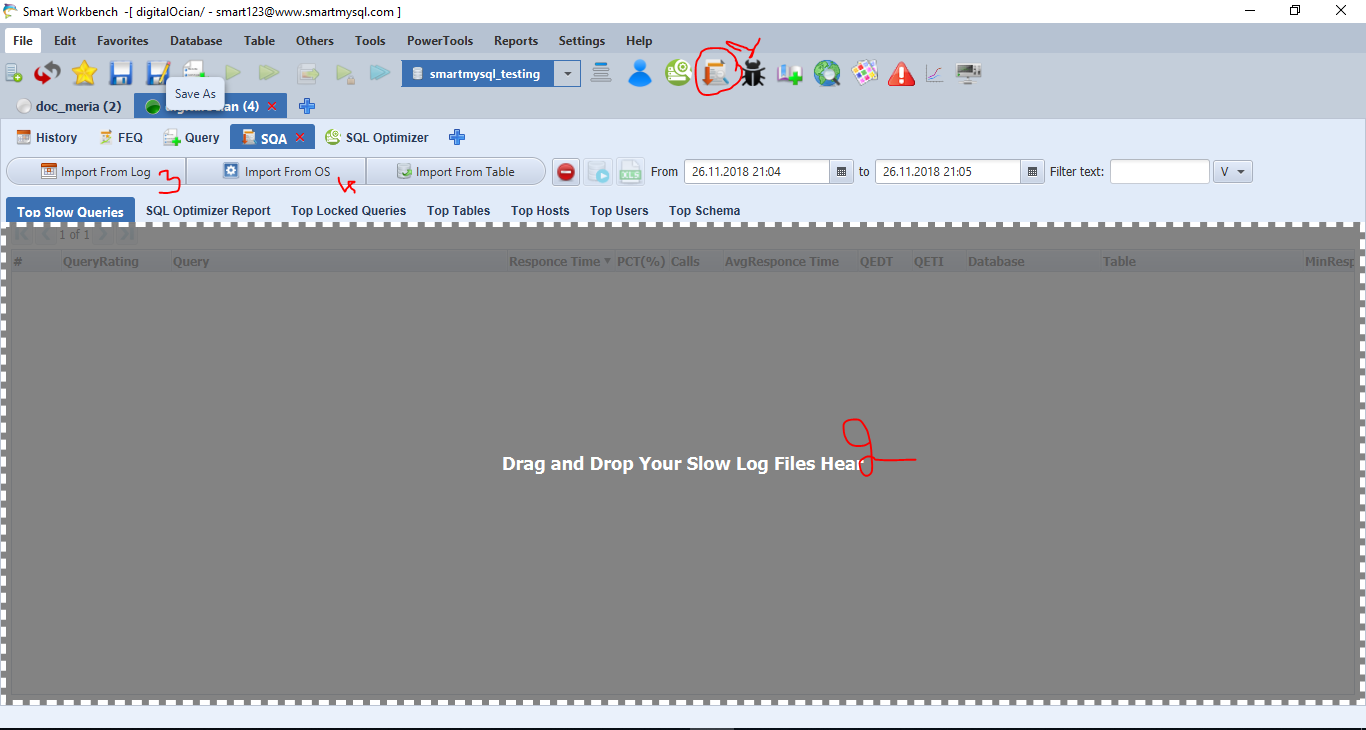

SmartWorkbench is the best tool for MySQL slow Query Log analysis and boosts slow running SQL queries performance. In addition, it can alert if you write such queries. Also, it can recommend the best indexes(optimal indexes) for your queries. In other words, the SmartWorkbench is easy and comfortable with automation and not needed any special skills to Optimize your SQL code.

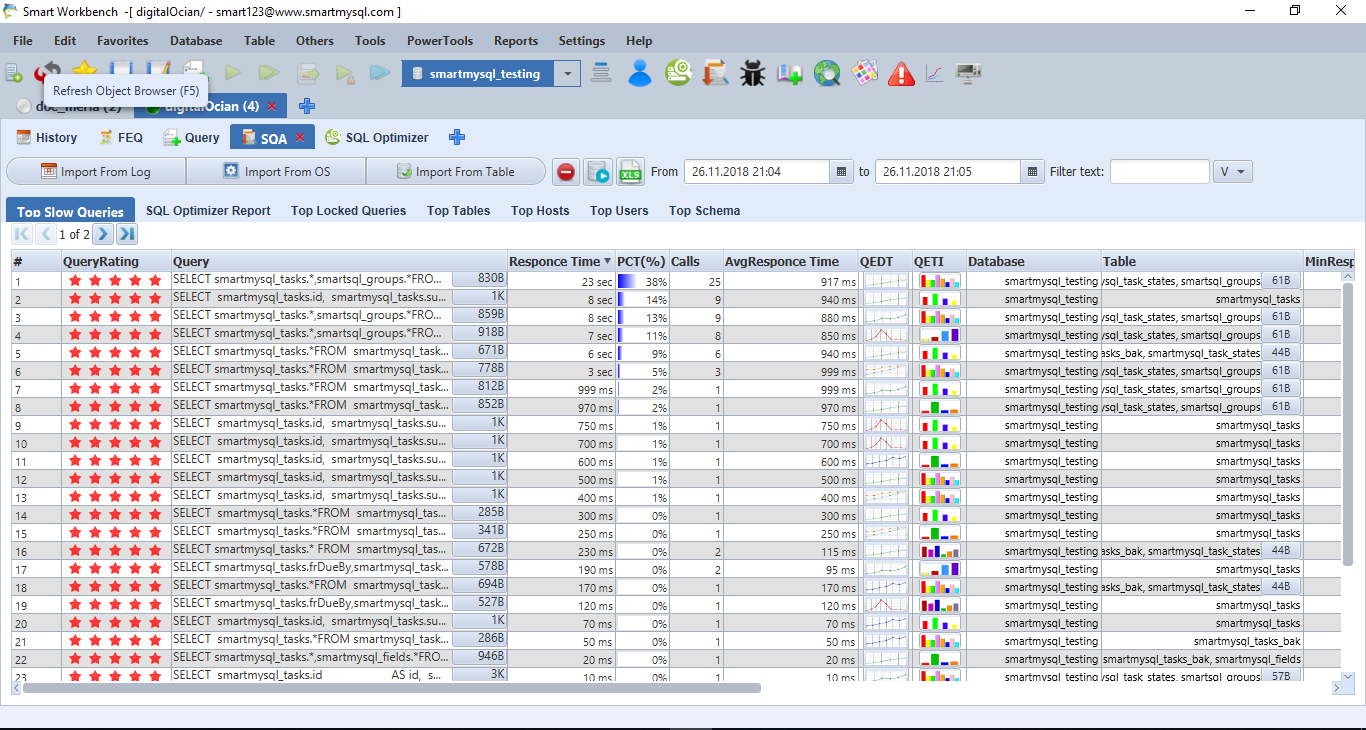

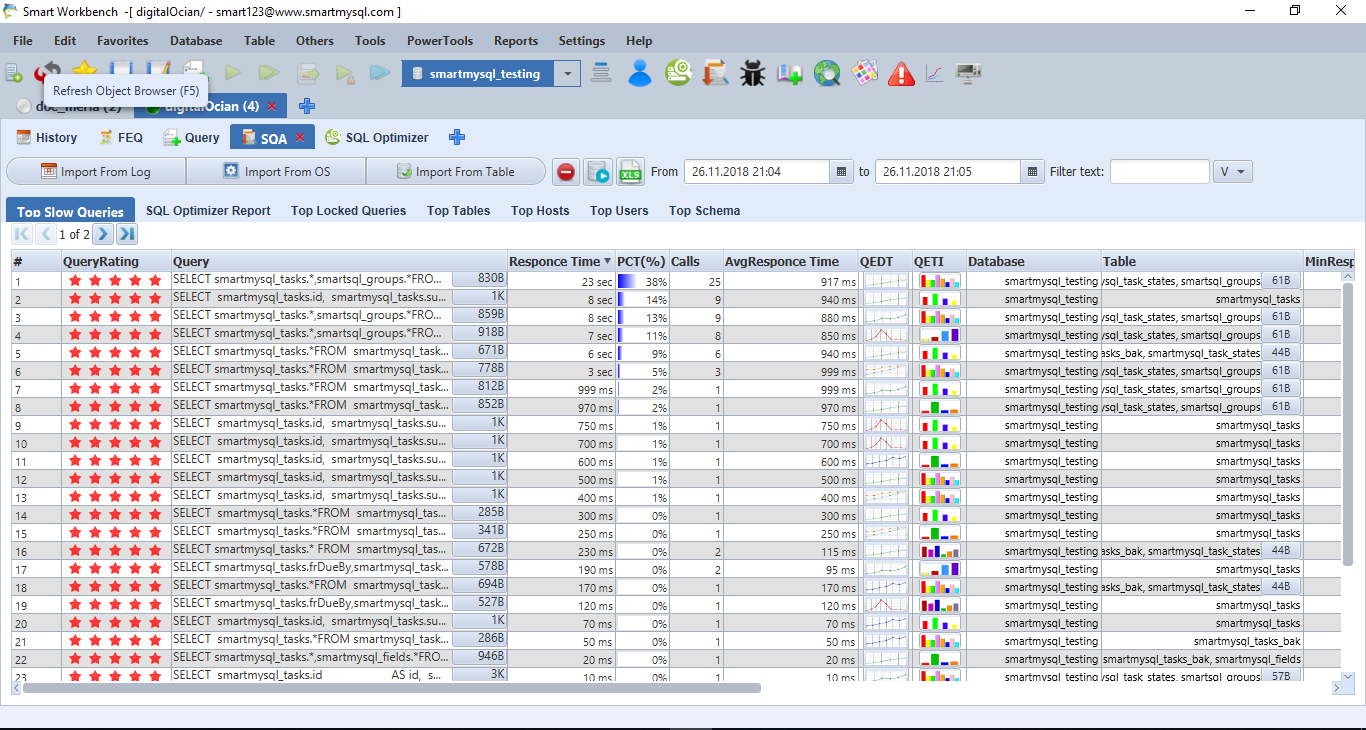

The SmartWorkbench has many advanced features and one of them is the Slow query log analyzer(1). It is a MySQL GUI tool that can connect to MySQL and pull a slow query log file(4) directly from OS if OS credits saved with the tool. Also, It allows you to drag and drop(2) or upload slow query log files(3).

To explain, It shows a consolidated report after submitting slow query log files with all keen observations including high frequency executed Queries, slow response queries, Queries execution time, lock time, total scanned records, total returned records, and Query ratting. In fact, It helps you to easily identify problematic queries, which are not having a good index.

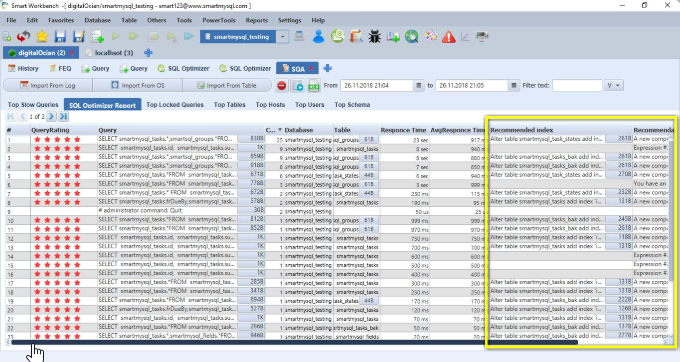

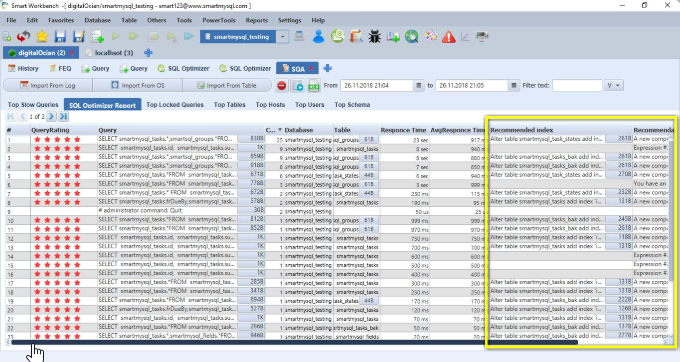

Optimizing all slow Queries at once

Most Importantly, It can do Query optimization for all slow queries and provide optimal indexes for all of them at once. Also, there is an option to download the report in excel format. As a result, you can observe significant overall DB performance after adding them. Also, It improves your application response time.

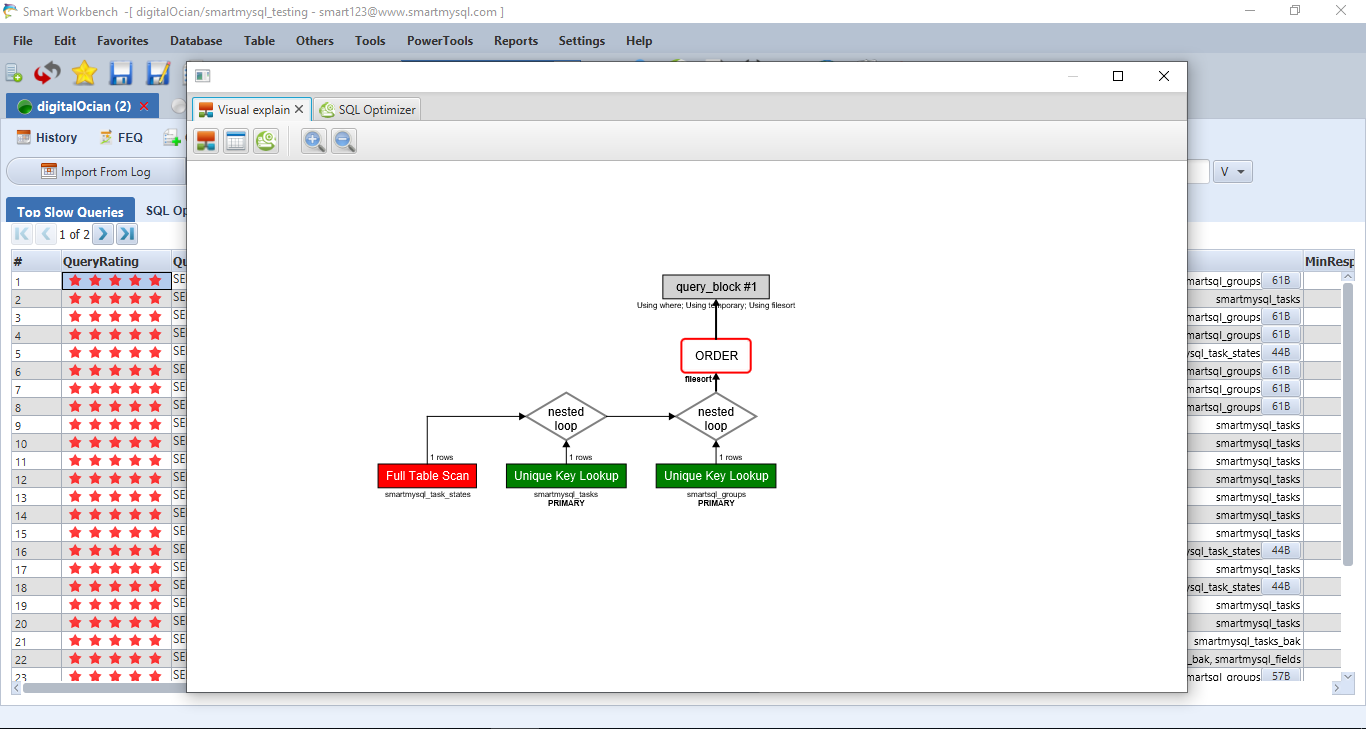

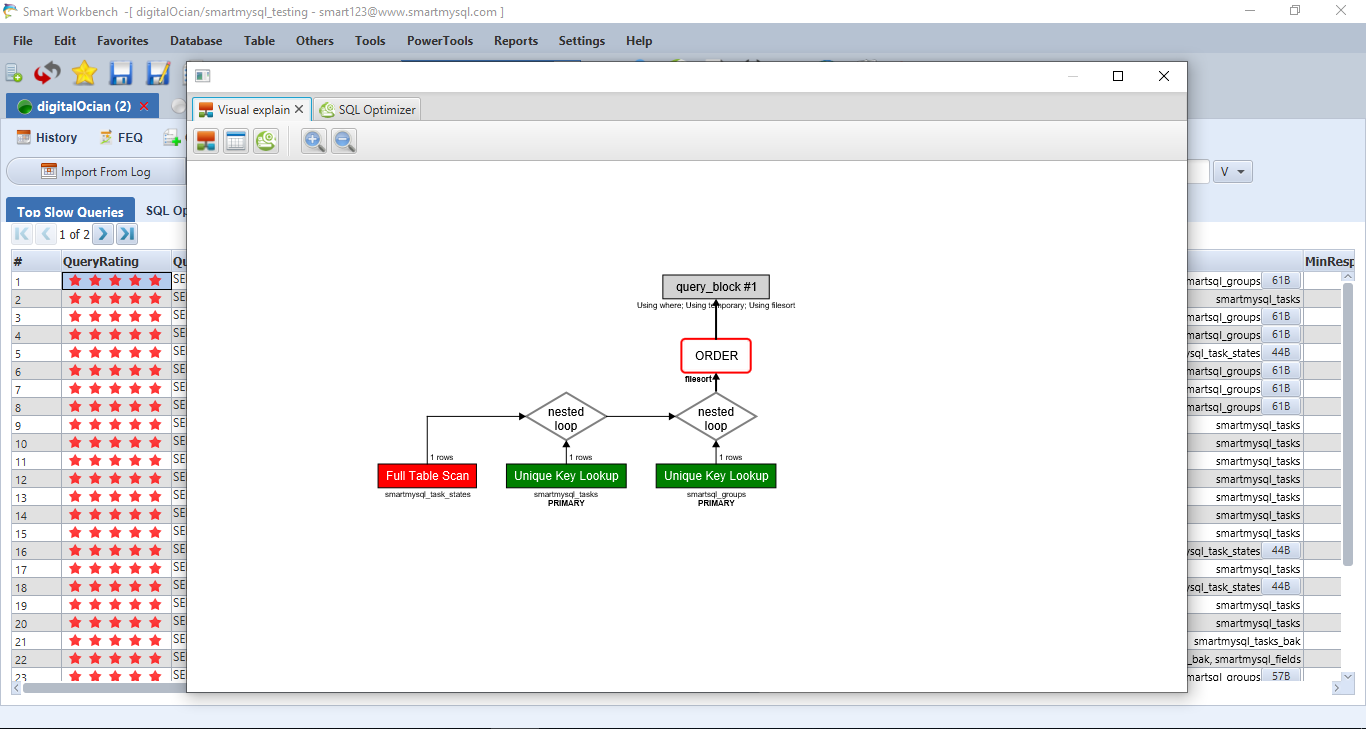

Also, It allows analyzing and optimizing selected queries if you double-click on the Query Rating / Explain columns in the report.

Query Rating it calculates query ratting based on how many rows scanning and how many rows it returns. The red stars indicate the Query is needed query Optimization.

Explain displays the Query execution plan in the table and visual diagram modes which is highly useful for Query execution plan analysis.

Identify & fix the problem at the root level:

In my view, SmartWorkbench is one of the MySQL advanced GUI tool has Query Optimization feature. In fact, SQL Queries should optimize for getting the best performance after developing them for your project. Generally, There are high chances to make mistakes in the Query optimization area and missing the optimal indexes if the query optimized done by nonprofessional. Generally, It supposed to be done by a DB performance expert or DB architect.

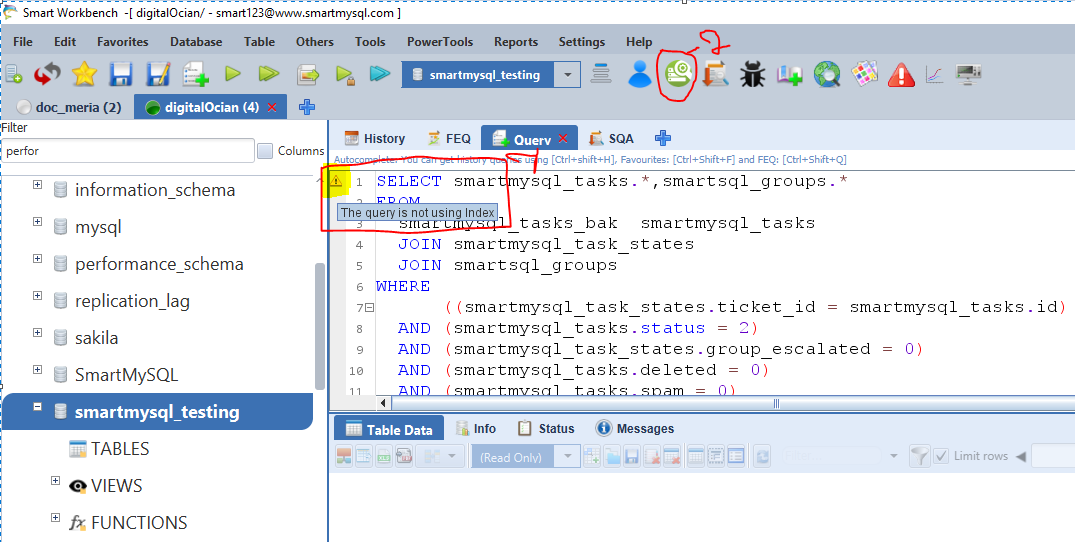

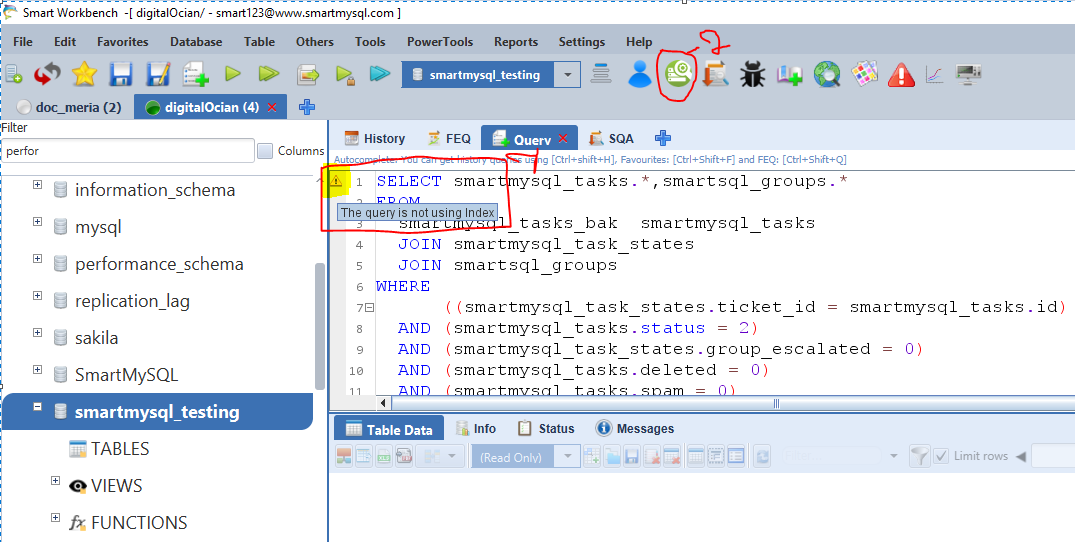

It is easy to identify such problems using SmartWorkbnech. It can trigger a warning after you have developed SQL query ends with a semicolon(;). Another key point, The tool automatically analyzes the query and gives a warning if the query doesn’t have an optimal index or if it goes for a full table scan in the MySQL optimizer Query execution plan.

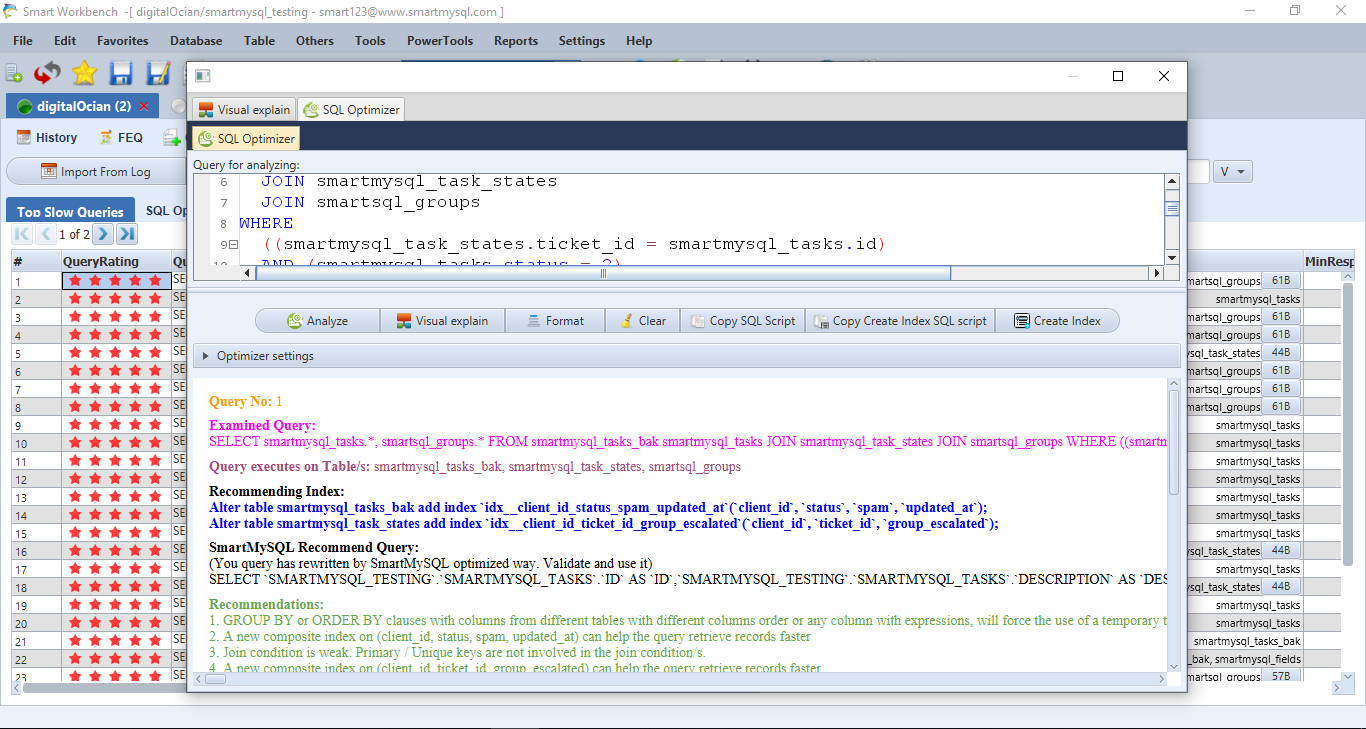

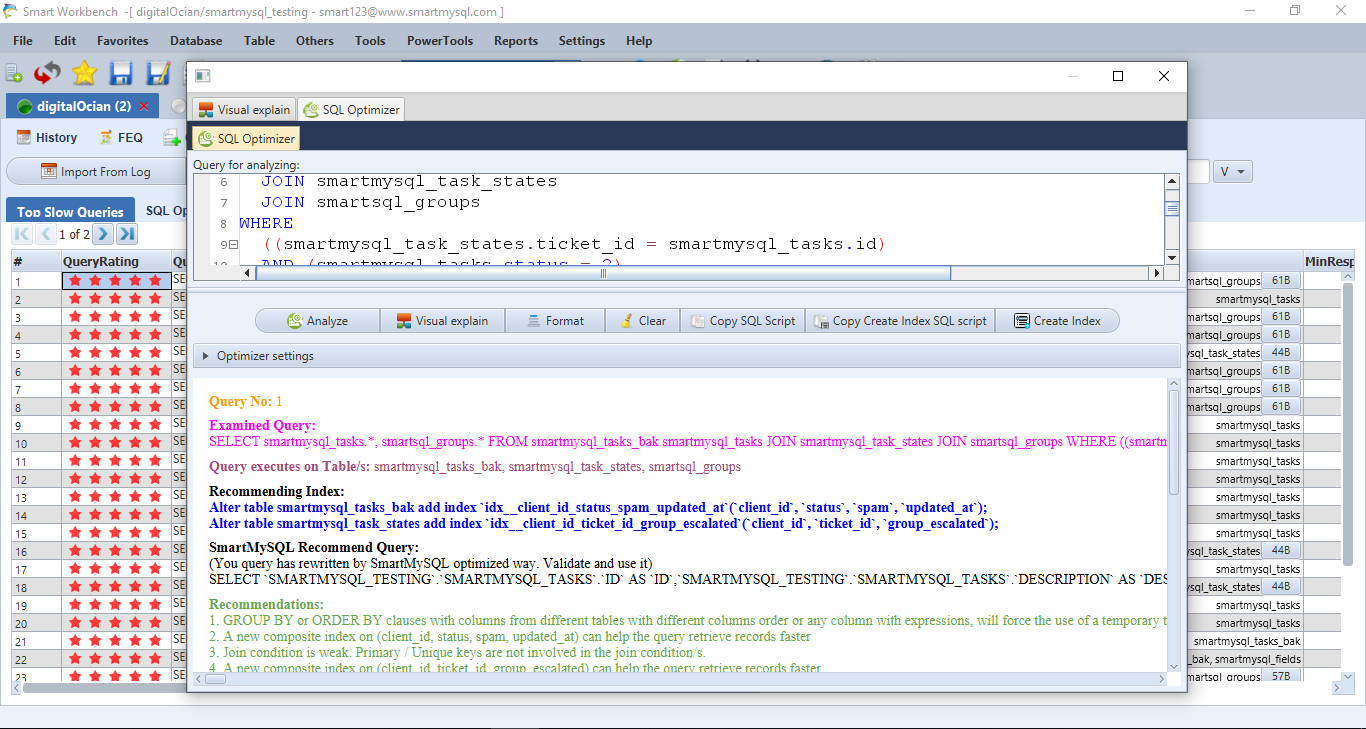

After that, If you click that warning icon(1) or Query Optimizer icon (2) Then it will open the Query Optimizer module.

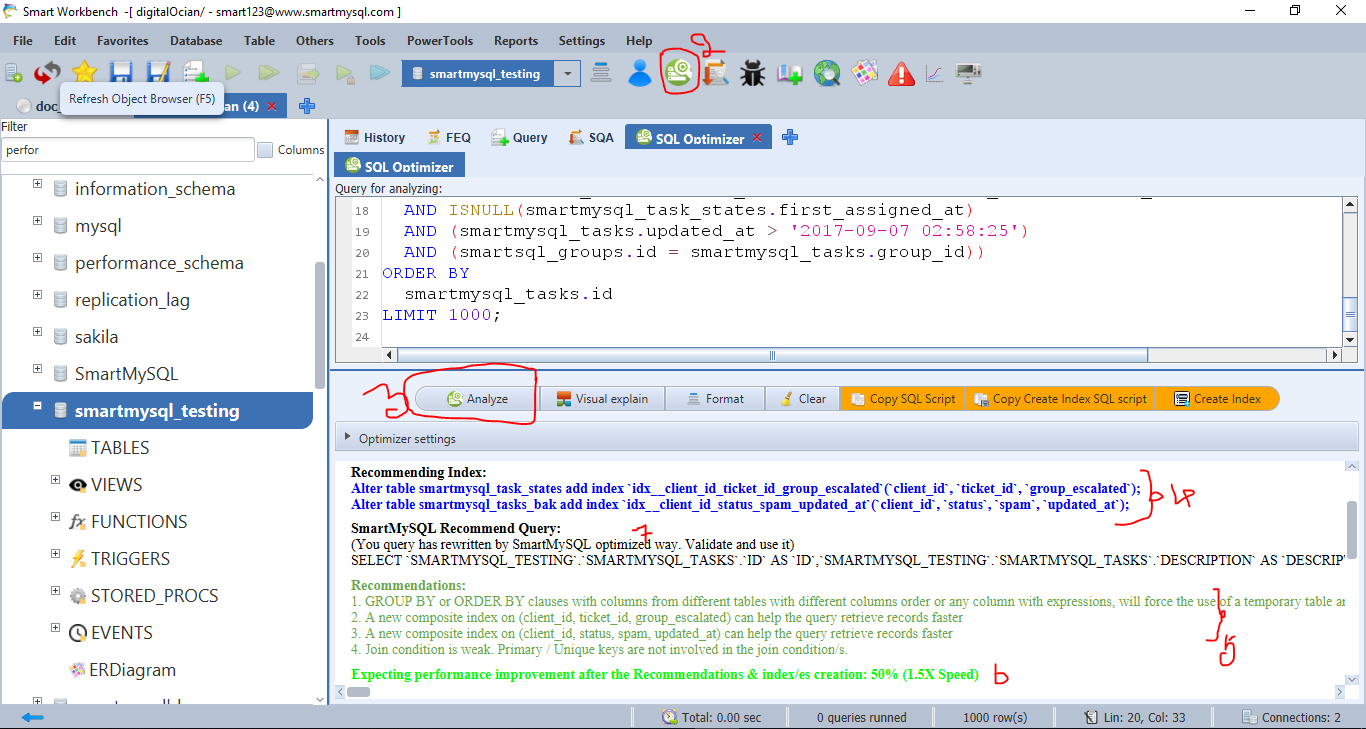

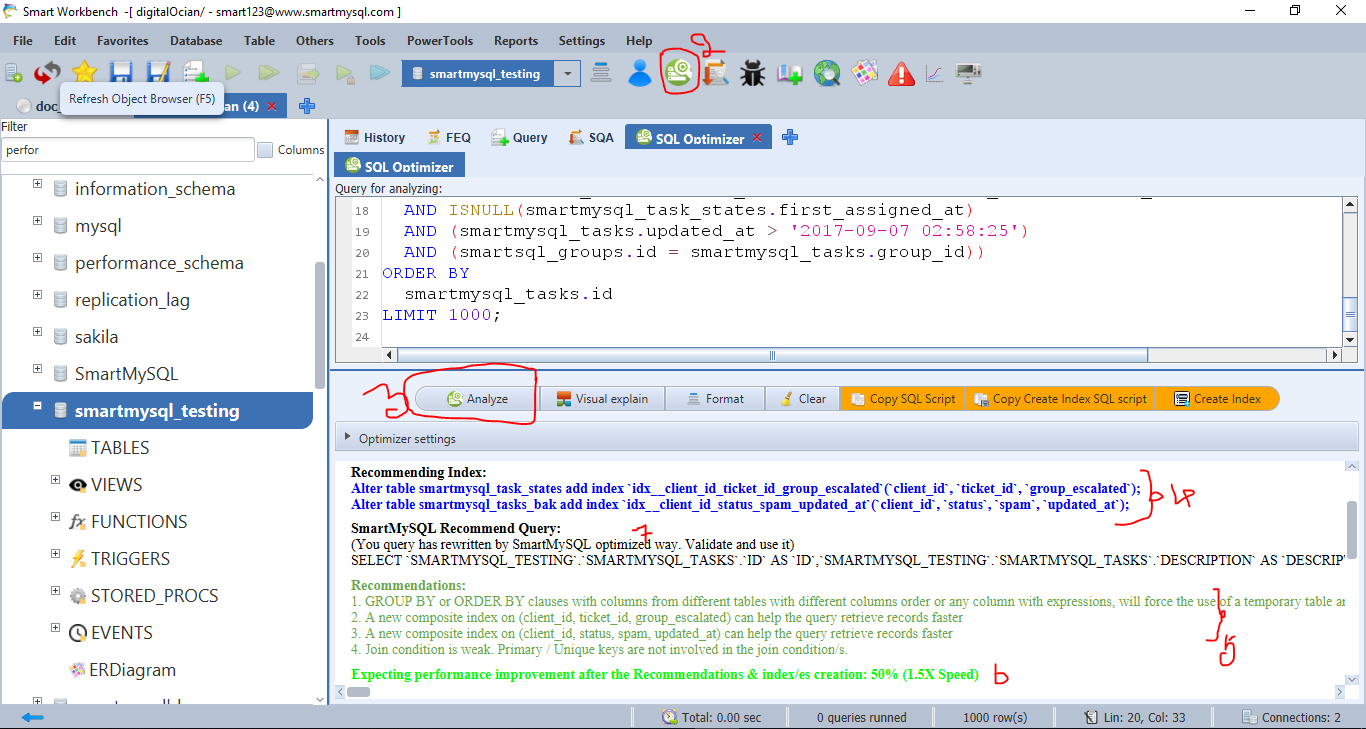

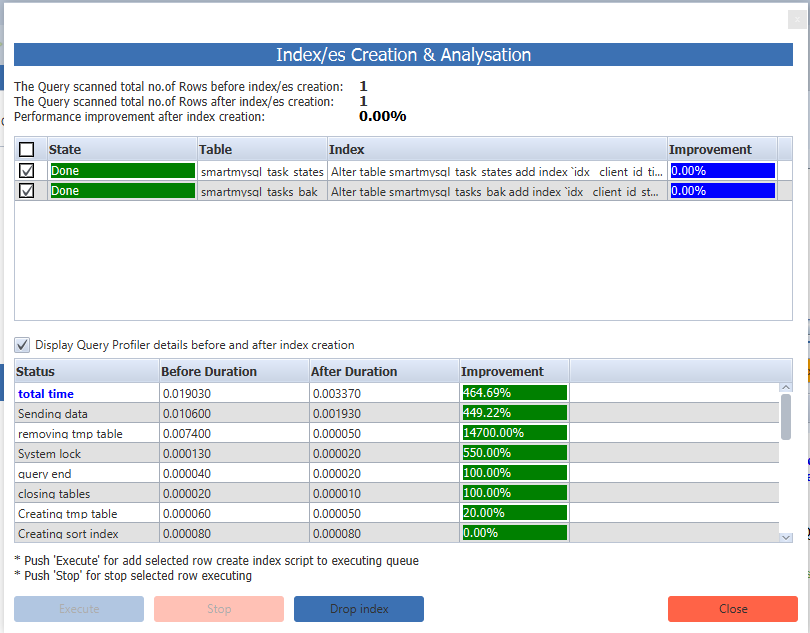

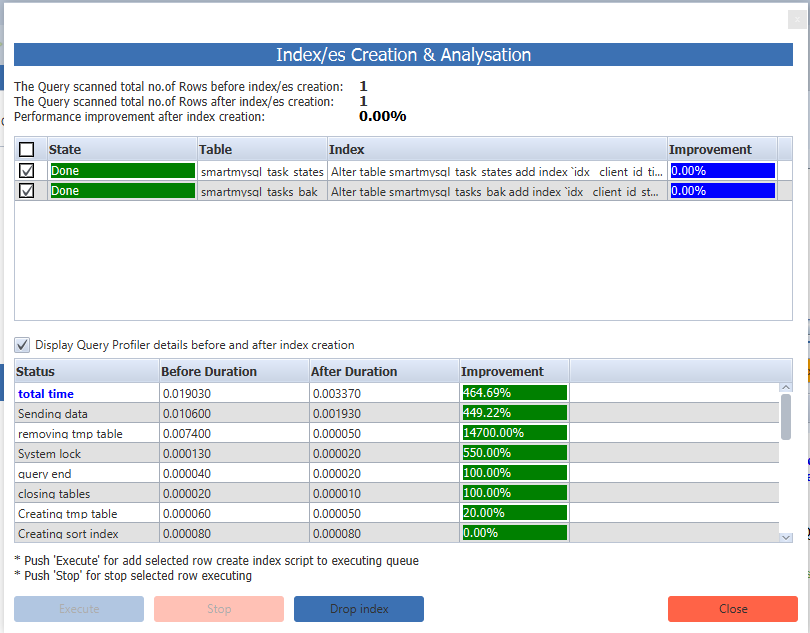

After that, run the analyzer(3) button then it will recommend optimal indexes(4), Query rewrite recommendations(5,7) . After that, if you click the “Create Index” button then it will add recommended indexes and provide a consolidated performance improvement report. The report includes all details such as the percentage of improvement in scanning records, percentage of query execution time improvement for each state of query execution. It is highly useful to identify significant performance improvement before and after Query optimization. It has given more 100X performance most of my SQL Queries.

MySQL Query Optimization Basics

What is the Optimal Index?

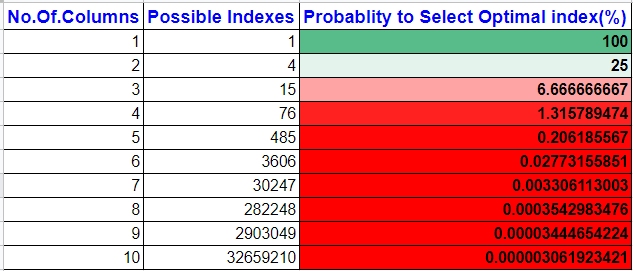

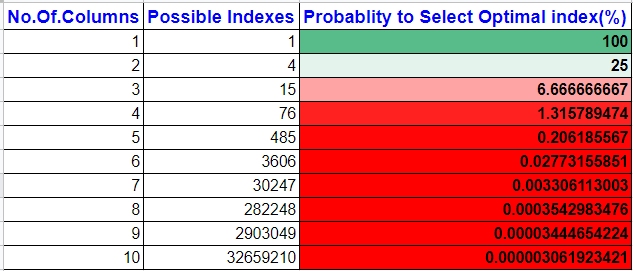

Generally, MySQL Optimizer uses only one index for filter records in most of the cases. There will be N!*(N-1) +N no.of indexes combinations that are available for the query. Here N is no.of unique columns in WHERE, GROUP BY and ORDER BY clauses. All columns belong to the same table and N >=1.

We must select columns based on index type and index’s behavior. There is at best one index per table per query which can scan fewer records to return the result. It will not scan more than 120% of return records. It is nothing but an Optimal index (good index). As a reality, there is a low possibility to select the optimal index using shortcut /easy methods by nonprofessionals.

- If N=1 then 1+1!*(0) = 1+1*(0)=1 possible index

- If N=2 then 2+2!*(1) = 2+1*1*(1)= 4 possible Indexes

- If N=3 then 3+3!*(2) = 3+3*2*(2)=15 possible indexes

- If N=4 then 4+4!*(3) = 76 possible indexes

- If N=5 then 5+5!*(4) = 485 possible indexes.

- If N=6 then 6+6!*(5) = 3606 possible indexes.

This table represents the probability of select optimal index based on the no.of column in WHERE, GROUP BY & ORDER BY clauses. It is gradually reducing the probability of selecting Optimal indexes if the no.of columns are increasing and it almost becomes zero if the query has 5 or more columns in WHERE, GROUP BY & ORDER BY clauses.

Common mistakes in Query Optimization

There are high chances to make mistakes in the query optimization area and missing the good indexes if the query optimized done by nonprofessional. Generally, It supposed to be done by a DB performance expert or DB architect.

In many cases, I have noticed the following a simple strategy while Query Optimization by application team or DBA team. These are bad strategies that give a negative performance.

- Create an index to Select all columns in the same order or reverse order in the query.

- Create an individual index on all columns in the query.

- Create an index on randomly selected columns in the query.

Indexes are very sensitive their behavior will be change based on filter types, logical operator between filter conditions, columns cardinality, apply functions on the filter column, arithmetic operations with filter columns, JOIN condition, Grouping function, GROUP BY clause columns and ORDER BY clause columns order.

Example Queries:

If we see the following three queries follow a different index stages.

Query A

Select * from EMP where Name='JOY' and DEPT='IT';

Query B

Select * from EMP where Name='JOY' or DEPT='IT';

Query C

Select * from Emp Where Name='JOY' and salary >2000 and DEPT='IT';Optimal indexes [Good indexes]

Query A

Alter table EMP add index(NAME,DEPT);

Query B

Alter table EMP add index(NAME); Alter table EMP add index(DEPT);

Query C

Alter table EMP add index(NAME,DEPT,Salary);Query A:

We have to select an index on (Name, DEPT) with the same order for Query A with the respective column’s cardinality. The “Name” column always high cardinality than the “DEPT” column.

Query B

we should create individual indexes on (Name) and (DEPT) column for Query B because the “OR” logical operator is not favor of B tree indexes and MySQL convert query B with unions as below and it needs individual indexes on each column to speed up the query execution time.

Query B

SELECT * from EMP where Name='JOY'

union

SELECT * from EMP where DEPT='IT';Query C

In this Query C example, if the user creates an index on the “SAL” column and the table has more than 50% records salary greater than 2000 then the query will scan 50% records using an index on the “SAL” column to return the result set which is one or two records. It looks better than a full table scan query but It is not a good index for DB to scan your query 50% of total records for returning 2 records. Even it is not the optimal index if you create a composite index on the same order or reverse order. The root cause is the rang filter(SAL>2000) always should be the last position because index conditional push will not work after rang filter. And We have to select other columns and positions based on the column’s cardinality.

SQL queries in real-time applications

In real-time Queries are complex as below and there is high probability do mistakes by nonprofessionals.

SELECT smartmysql_tasks.*,smartsql_groups.*

FROM

smartmysql_tasks_bak smartmysql_tasks

JOIN smartmysql_task_states

JOIN smartsql_groups

WHERE

((smartmysql_task_states.ticket_id = smartmysql_tasks.id)

AND (smartmysql_tasks.status = 2)

AND (smartmysql_task_states.group_escalated = 0)

AND (smartmysql_tasks.deleted = 0)

AND (smartmysql_tasks.spam = 0)

AND (smartmysql_tasks.client_id = 178244)

AND (smartmysql_task_states.client_id = 178244)

AND (smartsql_groups.account_id = 178244 )

AND ((smartmysql_tasks.created_at + INTERVAL smartsql_groups.assign_time SECOND) <= '2017-11-07 02:58:25')

AND ISNULL(smartmysql_task_states.first_assigned_at)

AND (smartmysql_tasks.updated_at > '2017-09-07 02:58:25')

AND (smartsql_groups.id = smartmysql_tasks.group_id))

ORDER BY

smartmysql_tasks.id LIMIT 1000;Common Problems with Bad indexing

If your DB is serving live traffic and frequently triggers production issues such as deadlock, Query locking, Replication lagging, Poor Query response & DB server hanging with High CPU usage, high RAM, high Disk IO usage problems which are slow down DB server performance. Also, these issues cause a DB service outage at pick traffic time

Is your MySQL server has good indexes?

MySQL Sow query log file, sys.statement_analysis View(If enable PS) can save each query total scan rows count and return rows count.

If your queries are scanning just less than 120% of total return records then the queries have good indexes/ optimal indexes. Otherwise, It needs series action. Also, there are more chances to improve performance after adding an optimal index.

In conclusion, I recommend you to use SmartWorkbench query performance tools if your project doesn’t have a DB performance expert/ DB Architect. Also, it helps to cross verify if you are learning MySQL with the tool until you become an expert.

I have provided a link about how to install SmartWorkbench. Please comment below if that any other best tools available in the market with all features in the below comments. Also, you can share your feedback after using SmartWorkbench.